How Do Generative AI Responses Work?

Key points of the article

- LLMs operate in two modes: knowledge base (training data) or real-time web search (RAG)

- AI decides to search the web when it detects uncertainty or needs fresh data

- Query fan-out breaks down each question into multiple sub-queries to explore the full semantic field

- ChatGPT uses ~25 sources per response vs ~10 for Perplexity, with only 6% of sources in common

- Sources come from top 10-30 SERPs: SEO remains the foundation of AEO

- AI favors well-structured, recent, and easily extractable content

- Structured data helps LLMs understand the context of your content

In 2026, a growing number of internet users no longer click on links. They ask their questions directly to ChatGPT, Perplexity, or Gemini and expect a synthesized answer.

But how do these AIs build their responses? Where does the information they cite come from?

To optimize your visibility in AI engines (what we call AEO — Answer Engine Optimization), you first need to understand how they work. That's what we'll break down in this article.

The Two Response Modes of LLMs

When you ask an AI a question, it can respond in two very different ways. Understanding this distinction is fundamental.

Mode 1: Response from the Knowledge Base

In this mode, the AI responds solely from what it learned during its training. It doesn't search for information on the Internet.

How does it actually work?

The model was trained on billions of texts: articles, books, websites, forums... During this training, it learned statistical relationships between words and concepts. When you ask it a question, it generates a response by predicting the most likely words in that context.

The LLM doesn't "know" in the human sense. It has learned patterns and generates coherent text based on those patterns.

The limitations of this mode:

- Knowledge cutoff: knowledge stops at the training date

- Hallucinations: if information is missing, the AI may invent a convincing but false answer

- No verification: no external source to validate claims

Mode 2: Response with Web Search (RAG)

In this mode, the AI combines its internal knowledge with external sources retrieved in real-time from the web. This is called RAG (Retrieval-Augmented Generation).

This is the mode that matters for AEO. When the AI cites sources, it means it has activated web search.

When Does AI Decide to Search the Web?

AI doesn't systematically launch a search. It first evaluates its level of uncertainty about the question asked.

Signals that trigger a web search:

- Queries that require fresh data (news, prices, recent events)

- Questions that demand verifiable information (figures, statistics, precise facts)

- Searches related to e-commerce (product comparisons, reviews, prices)

- Topics where the model detects uncertainty in its knowledge

Conversely, for general knowledge questions or well-established concepts, AI often responds directly from its knowledge base.

The Complete Process of a Web Search Response

When AI decides to search the web, it follows a multi-step process. This is where it gets interesting for understanding how to appear in its responses.

Step 1: Prompt Analysis

The AI analyzes your question to understand the search intent. It identifies:

- The main topic

- Implicit subtopics

- The expected level of detail

- Context (location, language, conversation history)

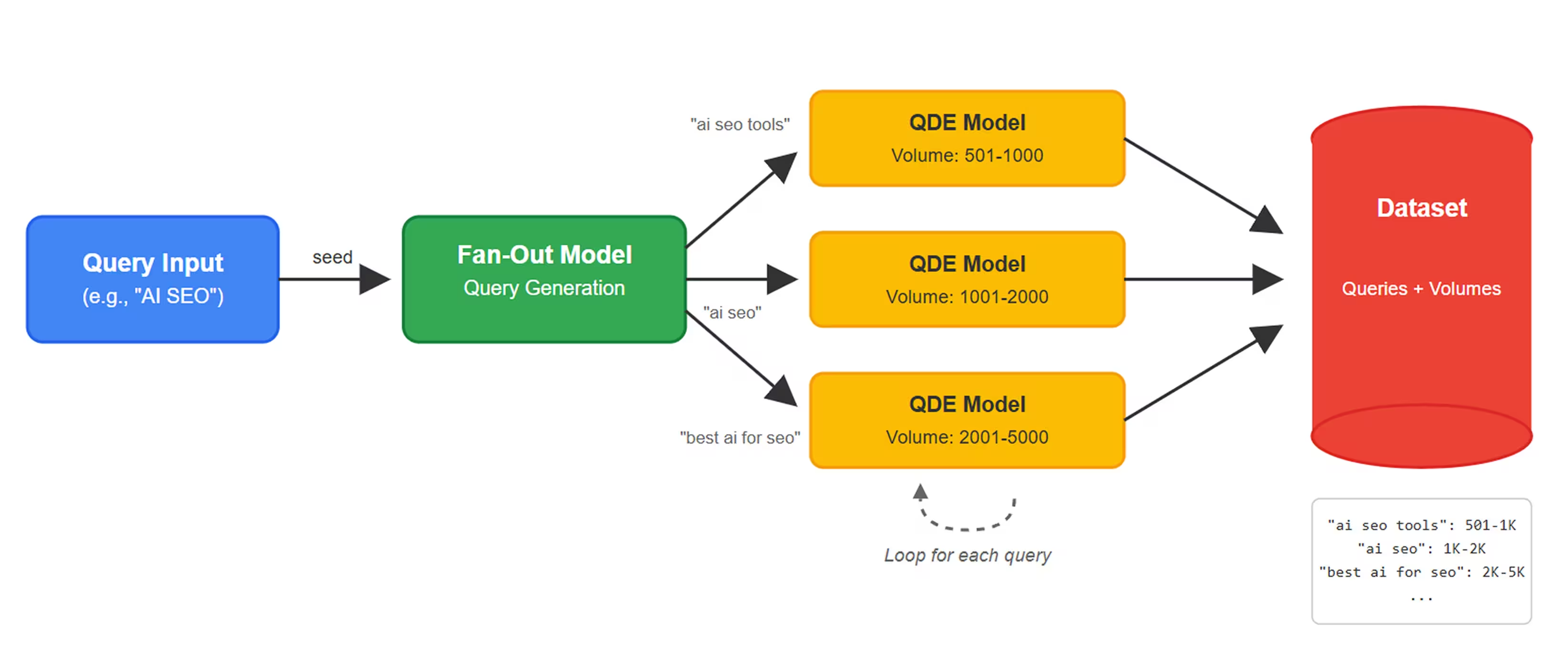

Step 2: Query Fan-Out (Decomposition into Sub-Queries)

This is the key step. The AI doesn't launch a single search matching your question. It breaks down your prompt into multiple sub-queries to explore different angles of the topic.

Concrete example:

You ask: "What's the best SEO strategy for an e-commerce site in 2026?"

The AI generates multiple sub-queries:

- "e-commerce SEO strategy 2026"

- "SEO trends 2026"

- "product page SEO optimization"

- "technical SEO e-commerce"

- "link building e-commerce"

- "Core Web Vitals SEO impact"

This mechanism is called query fan-out. It allows the AI to cover the entire semantic field of your question.

Key figures:

- ChatGPT launches an average of 2.5 searches per prompt

- ChatGPT uses an average of 25 sources per response

- Perplexity (free version) launches 1 search and uses about 10 sources

- ChatGPT sometimes formulates its queries in English, even for questions in other languages

Step 3: Search and Source Retrieval

The AI sends its sub-queries to search engines. Each LLM uses different sources:

- ChatGPT: mix of Google + Bing

- Perplexity: primarily Google

- Gemini: Google Search

- Copilot: Bing

Retrieved results generally come from the top 10 to top 30 SERPs. The AI doesn't go looking on page 5 of Google.

Important point for AEO: to appear in AI responses, you first need to be well-ranked in SEO for the sub-queries generated by query fan-out.

Step 4: Source Evaluation and Selection

The AI doesn't treat all sources equally. It evaluates:

- Relevance to the question

- Perceived reliability of the site (authority, E-E-A-T)

- Content freshness (publication/update date)

- Content structure (ease of information extraction)

Well-structured content with clear headings, direct answers, and factual data is prioritized. The AI needs to be able to easily extract information.

Step 5: Synthesis and Response Generation

The AI compiles information from different sources to generate a single, coherent response. It:

- Cross-references information to identify consensus

- Reformulates in a fluid, natural style

- Adds citations to sources used (depending on the LLM)

- Structures the response to be immediately usable

Diagram: From Prompt to Response

Differences Between LLMs

Not all LLMs work the same way. Here's what distinguishes the main ones.

ChatGPT (OpenAI)

ChatGPT offers two modes:

- Without web search: responds from its knowledge base (knowledge cutoff)

- With web search: activates browsing automatically or manually

When search is activated, ChatGPT is the most "source-hungry": 25 sources on average per response, with 2.5 searches per prompt.

Distinctive feature: ChatGPT often formulates part of its queries in English, even for questions in other languages.

Perplexity

Perplexity is always connected to the web. That's its purpose: an answer engine, not a chatbot.

It primarily uses Google as a source and systematically displays citations with links to sources. The free version launches about 1 search per prompt and uses about 10 sources.

Perplexity formulates queries very close to the original prompt, unlike ChatGPT which explores more broadly.

Google AI Overviews / AI Mode

Google AI Overviews (formerly SGE) and AI Mode are integrated directly into Google search results.

The advantage: they rely on the complete Google index. Cited sources are generally those appearing in the classic top 10 SERPs.

This is the LLM where traditional SEO has the most direct impact on AI visibility.

Gemini

Gemini (Google) works similarly to AI Overviews but in a conversational interface. It uses Google Search for its searches and can access real-time data.

Claude (Anthropic)

Claude primarily operates without web search in its standard version. It responds from its knowledge base with a knowledge cutoff date.

Some integrations (like Claude in third-party tools) can add search capabilities, but it's not native.

What This Means for Your Visibility

Now that we understand how it works, what does this concretely mean for appearing in AI responses?

SEO Remains the Foundation

To appear in AI sources, you first need to be well-ranked on Google and Bing. LLMs pull their sources from the top 10-30 SERPs.

No shortcuts: AEO starts with good SEO.

Cover the Complete Semantic Field

With query fan-out, AI doesn't search for your exact keyword. It explores the entire semantic field around the question.

Consequence: your content must cover a topic comprehensively, not just answer a specific query. Topic clusters and pillar content make complete sense here.

Make Information Extraction Easy

AI needs to be able to easily extract information from your content. For this:

- Clear headings (H2, H3) that clearly announce the content

- Direct answers to questions in the first paragraphs

- Lists and tables for factual information

- Short paragraphs (2-4 sentences max per idea)

AI-friendly content is above all human-friendly content. If a reader can scan your article and quickly find the information they're looking for, so can AI.

Structured Data

Structured data (Schema.org) helps AI understand the nature and context of your content. It allows clear identification of:

- Who is the author (credibility, E-E-A-T)

- What entity is described (company, product, person)

- What are the questions/answers (FAQPage)

- What is the publication/update date

👉 We've written a complete guide on this topic: What Structured Data to Implement for SEO and AEO?

Content Freshness

AI favors recent and updated content. A 2022 article will have less chance of being cited than content updated in 2026.

Remember to update your key content and clearly indicate the last modification date.

Our Perspective at Vydera

At Vydera, we've been helping our clients with AEO for several months. Here's what we observe in the field.

AEO is not a revolution, it's an evolution.

The fundamentals remain the same: create quality content, well-structured, that truly answers users' questions. What changes is the importance of certain signals that were sometimes overlooked:

- Complete semantic coverage of a topic (not just a keyword)

- Editorial structure that facilitates extraction

- Structured data that makes context explicit

- Freshness and regular updates

What surprises us:

Only 6% of sources are shared between ChatGPT and Perplexity for the same prompt. This means that to be visible on both, you potentially need to double your efforts. Each LLM has its own selection criteria.

Our advice:

Don't start from scratch. Begin by analyzing how your brand appears (or doesn't) in AI responses for your key queries. Identify the sources cited by LLMs in your niche. Then optimize your existing content before creating new ones.

Conclusion

Generative AI doesn't "search" for an answer like a traditional search engine. It builds one by combining its internal knowledge and web sources retrieved in real-time.

The query fan-out mechanism is central: AI breaks down your question into sub-queries to explore the entire semantic field. To appear in its responses, you need to:

- Rank well in SEO for potential sub-queries

- Cover the topic comprehensively (topic clusters)

- Structure content to facilitate information extraction

- Implement structured data to make context explicit

- Maintain content freshness

AEO starts with good SEO. But it requires a more comprehensive approach, focused on semantic coverage and structural quality of content.

Without web search, AI responds solely from its training data with a fixed knowledge cutoff date and risk of hallucination. With web search (RAG mode), it queries Google or Bing in real-time to retrieve up-to-date sources and generate a more reliable, well-sourced response.

Query fan-out is the mechanism by which AI breaks down your question into multiple sub-queries before launching its searches. For example, a question about e-commerce SEO strategy generates searches on trends, technical SEO, link building, etc. This allows covering the entire topic comprehensively.

Each LLM uses different search engines (ChatGPT: Google + Bing / Perplexity: Google), formulates its queries differently, and selects sources according to its own criteria. Result: only 6% of sources are shared between the two for the same prompt.

First, you need to rank well in SEO because LLMs pull their sources from the top 10-30 Google/Bing results. Then, structure your content for easy extraction (clear headings, direct answers, short paragraphs) and implement structured data to provide explicit context.

Yes, SEO remains fundamental for AEO. AI doesn't create its own sources: it retrieves content that already ranks well on search engines. Without SEO visibility on the sub-queries generated by query fan-out, your content won't be included in LLM responses.

.svg)